Yesterday we gave a small demo at TWC of our first concepts and prototype. We started by talking about our activities over the last two weeks and our plan of action.

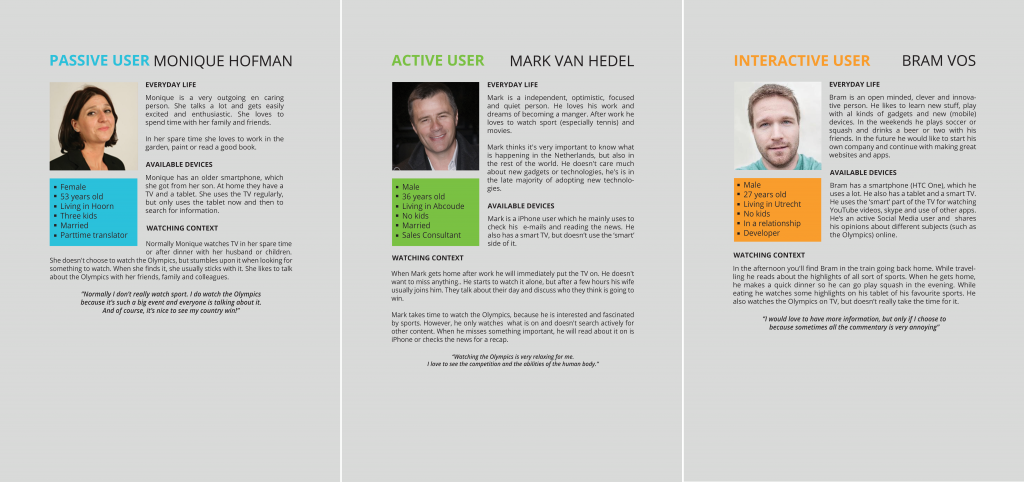

After this, we discussed our personas. At the beginning of last week, we conducted some user interviews about the Olympics. We asked the interviewees about their viewing habits, which devices they used and why they watched the Olympics. We got some useful insights and decided to create personas based on these results.

While we were discussing the personas, Rutger (TWC) pointed out that we hadn’t covered younger people such as teenagers. He thought this might be an interesting age group as well, since they are our future users.

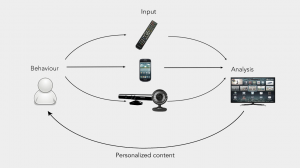

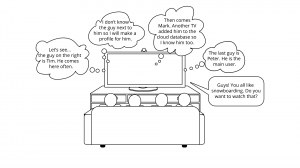

After the feedback we showed our first concept. This concept revolves around smart TVs that learn from your behaviour and actions. When the TV ‘sees’ that you’re bored while watching soccer, it saves this in your personal profile. The next time soccer is on, it will not notify you.

The TV could also connect with your other devices. If you’re looking up more information on Wikipedia, for example, your TV will recognize this and assume that you’re interested in the subject. It will remember this and save it in your personal profile.
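To make the idea a bit more concrete, here is a minimal sketch of how such a personal profile could work. It is purely illustrative: the class name, the signal values and the threshold are all assumptions we made up for this example, and a real system would need a far more careful way of turning observations into scores.

```python
from dataclasses import dataclass, field


@dataclass
class ViewerProfile:
    """Hypothetical per-viewer profile; a positive score means interest."""
    scores: dict = field(default_factory=dict)

    def record(self, topic: str, signal: float) -> None:
        # signal > 0: sign of interest (e.g. a Wikipedia lookup on another device)
        # signal < 0: sign of disinterest (e.g. the TV 'sees' that you are bored)
        self.scores[topic] = self.scores.get(topic, 0.0) + signal

    def should_notify(self, topic: str, threshold: float = 0.0) -> bool:
        # Topics we know nothing about score 0.0, so the viewer still gets notified.
        return self.scores.get(topic, 0.0) >= threshold


profile = ViewerProfile()
profile.record("soccer", -1.0)    # looked bored while watching soccer
profile.record("swimming", 1.0)   # looked up swimming on a tablet

print(profile.should_notify("soccer"))    # False
print(profile.should_notify("swimming"))  # True
print(profile.should_notify("judo"))      # True
```

In this sketch a single bored moment is already enough to switch off notifications for a sport, which is probably too eager. How many observations are needed before the TV acts on them is exactly the kind of question the concept leaves open.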

Before we presented this concept, we discussed whether users would want to be scanned and monitored all the time. The TWC team also raised this point. They thought that TV manufacturers will not keep developing tools that revolve around the use of cameras in smart TVs. This is something we will have to research soon.

They also mentioned that the Olympics only last two weeks, which might not be long enough to learn a user’s habits. It might also be hard to ‘read’ someone’s behaviour. If someone is relaxing on the couch and slowly falls asleep, does this mean he is bored and dislikes the sport? Or is he just relaxing? It usually takes a lot of time to get to know someone’s behaviour, let alone draw conclusions from it in just a couple of weeks. The concept was really good, but in order for it to work well, a well-thought-out recommendation system needs to be developed.

In the second concept we wanted to let the user take control. Users can choose their own camera angle and can even zoom in. They can also ask for more information by pushing (or clicking) a button. When this overlay is activated, you will see ‘points of interest’ (POIs) to choose from. If you choose one, you will get more information on that POI.

The problem with this concept is that it will be hard to implement with live TV. You would need to find a way to get all kinds of live metadata, and there is no time to manipulate or curate the information.

The last concept also gave more information to the user. On the right side of the screen you will see a kind of live feed where you can choose from all kinds of information. (Over here, you can also see our interactive prototype.)

TWC wasn’t that enthusiastic about this concept. It wasn’t innovative enough, because everybody has been doing this for a couple of years already. We need to think more outside the box, include multiple devices and not try to put everything on the big screen.

In the upcoming weeks we need to focus on what is happening right now (taking it as a starting point) and on how we are going to gather metadata and content, and we should avoid thinking about advertisers etc.